Proof-of-Concept to Production: How Amazon Scales with GenAI (AMZ302)

Neal Gamradt

Monday, January 20, 2025

Updated: Tuesday, January 21, 2025

At re:Invent 2024, I attended many different sessions. In this post I will summarize what I learned in the “Proof of Concept to Production: How Amazon Scales with GenAI” session.

Overview

This session (AMZ302) discussed how Amazon scales its AI solutions, particularly focusing on the transition from proof-of-concept to production.

This session was presented by:

- James Park: Principal ML Solutions Architect at AWS

- Burak Gozluku: Principal ML Solutions Architect at AWS

Details

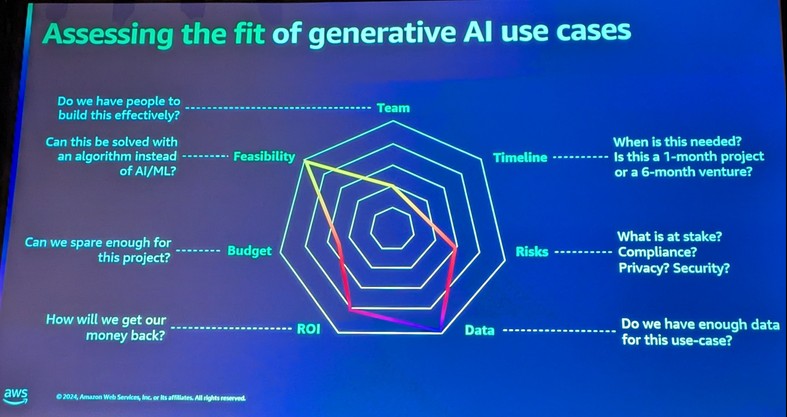

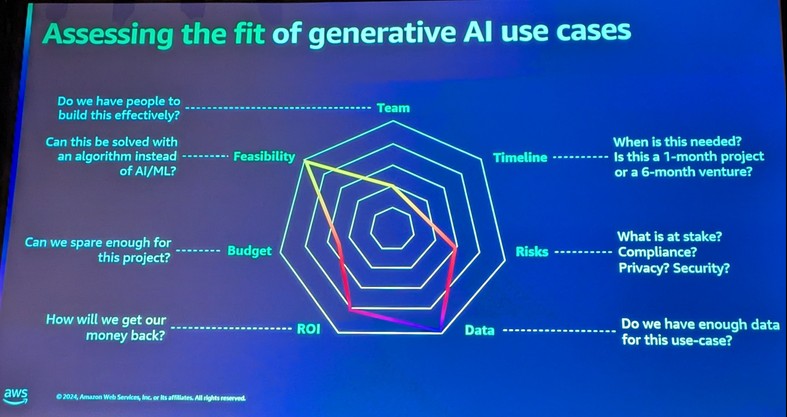

Below is a useful slide which outlines factors you should consider when assessing the fit of generative AI for different use cases:

Assessing the fit of generative AI use cases

The following are the main points that I took away from this session:

- Amazon's Use of AI:

- Challenges in AI Implementation:

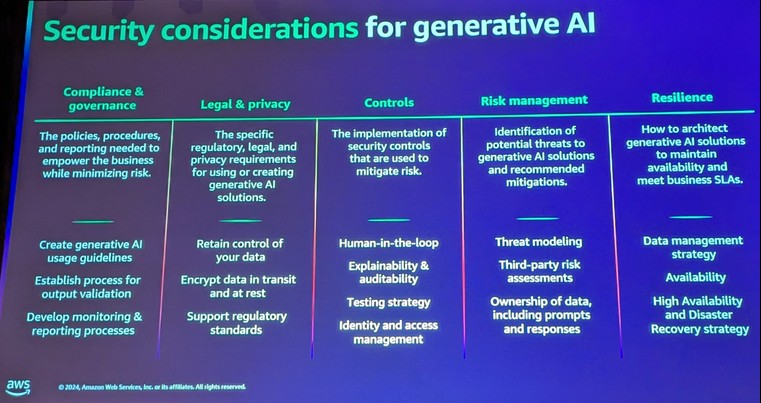

- Key challenges include handling hallucinations, keeping latency low, guarding against jailbreaking, and producing unbiased review summaries through cost-effective batch processing.

- Experimentation is crucial for AI product development, involving thousands of tests to meet accuracy standards.

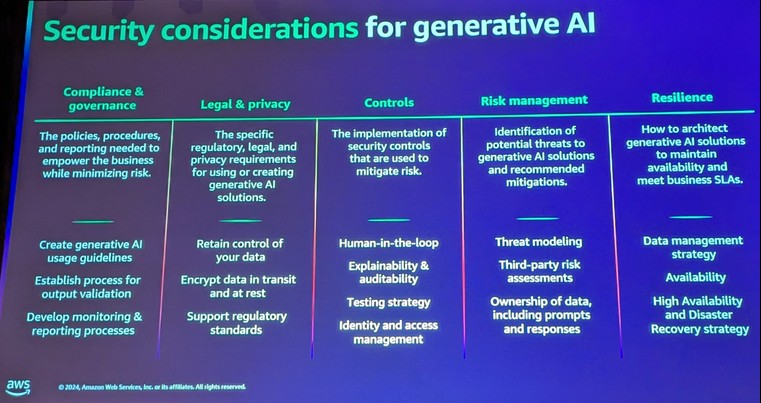

- There are many security considerations to take into account, as outlined in the following slide:

Security considerations for generative AI

- Experimentation and Model Selection:

- Choosing the correct model is difficult and requires extensive experimentation.

- Amazon uses multiple models that may communicate with each other before responding, and they budget for the experimentation time needed to get the current version correct.

- Off-the-shelf models are commonly used, with a focus on prompt engineering.
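
To make the experimentation point concrete, here is a minimal sketch of how prompt variants for an off-the-shelf model could be scored against a small labeled test set. None of this was shown in the session: `evaluate_prompt`, `rank_prompts`, the `{input}` placeholder, and `stub_model` are hypothetical names, and the stub stands in for a real model call.

```python
from typing import Callable, Dict, List, Tuple

# A test case is (input text, expected label). The data used with this
# harness is assumed to be hand-labeled; nothing here comes from the session.
TestCase = Tuple[str, str]


def evaluate_prompt(
    template: str,
    model: Callable[[str], str],
    cases: List[TestCase],
) -> float:
    """Return the fraction of cases where the model output equals the expected label."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    for text, expected in cases:
        # The template is assumed to contain a single {input} placeholder.
        output = model(template.format(input=text))
        if output.strip().lower() == expected.lower():
            correct += 1
    return correct / len(cases)


def rank_prompts(
    templates: Dict[str, str],
    model: Callable[[str], str],
    cases: List[TestCase],
) -> List[Tuple[str, float]]:
    """Score every prompt template and return (name, accuracy) pairs, best first."""
    scores = [(name, evaluate_prompt(t, model, cases)) for name, t in templates.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call: says 'positive' if the prompt mentions 'great'."""
    return "positive" if "great" in prompt.lower() else "negative"


if __name__ == "__main__":
    cases = [("This product is great", "positive"), ("It broke in a day", "negative")]
    templates = {
        "v1": "Classify the sentiment: {input}",
        "v2": "Is this great? {input}",  # leaks the keyword, so the stub always says positive
    }
    for name, accuracy in rank_prompts(templates, stub_model, cases):
        print(f"{name}: {accuracy:.2f}")
```

In practice the stub would be replaced by a call to whichever model is being evaluated, and the test set would grow as new failure cases are found.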

- Iterative Improvement and Customer Feedback:

- Iterative improvement and customer feedback are essential for refining AI models.

- Public benchmarks are insufficient; actual user feedback and anecdotal information are critical for monitoring and enhancing the product.

- Your first release is probably not going to be great and will have to improve over time.

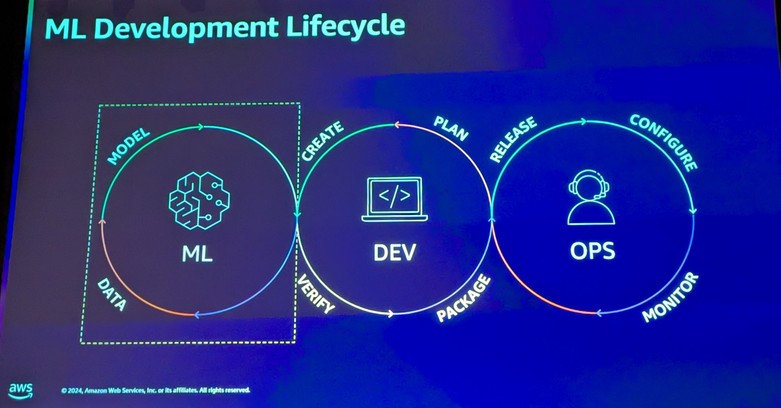

ML Development Lifecycle

- Cost and Efficiency:

- Speed, cost, and latency are important factors in AI implementation.

- Amazon Bedrock Flows can be a cost-effective way to improve accuracy.

- Human-in-the-loop is important for better accuracy, and having multiple small LLMs might be more efficient.
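
One way to picture the combination of several small models with a human in the loop is a simple router, sketched below. This is my own illustration and not an architecture from the session: the task names, the `route` function, the `min_confidence` threshold, and the idea that each model returns a confidence score are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Each small model is assumed to return (draft answer, confidence in [0, 1]).
SmallModel = Callable[[str], Tuple[str, float]]


@dataclass
class Routed:
    answer: str
    handled_by: str  # "small_model" or "human_review"


def route(
    task: str,
    question: str,
    models: Dict[str, SmallModel],
    min_confidence: float = 0.8,  # hypothetical threshold, tune per use case
) -> Routed:
    """Send the question to the small model for its task, or escalate to a human."""
    model = models.get(task)
    if model is None:
        # No specialised model for this task: a person handles it from scratch.
        return Routed(answer="", handled_by="human_review")
    answer, confidence = model(question)
    if confidence >= min_confidence:
        return Routed(answer=answer, handled_by="small_model")
    # Low confidence: keep the draft so the reviewer can edit it rather than start over.
    return Routed(answer=answer, handled_by="human_review")
```

The design choice here is that a human only sees the cases the small models are unsure about, which keeps review effort focused where accuracy is most at risk.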

- Practical Considerations:

- Some decisions should be made at the vector database level before reaching out to the LLM.

- It's important to start small, focusing on specific use cases like customer support, and then expand from there.

- Filtering out toxic responses is important and can be done with the help of other AWS services. For example, Amazon Ads uses Amazon Comprehend to filter out toxic responses.
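
As a rough sketch of the toxicity filtering idea, the function below passes candidate responses through Amazon Comprehend's `detect_toxic_content` API and keeps only those under a score threshold. The session did not say which Comprehend API Amazon Ads calls, so treat this as one possible approach; `filter_toxic` and the `0.5` threshold are hypothetical, and the client is passed in so the function can be exercised without AWS credentials.

```python
from typing import Any, List


def filter_toxic(
    client: Any,
    responses: List[str],
    threshold: float = 0.5,  # hypothetical cut-off, not a recommended value
    language_code: str = "en",
) -> List[str]:
    """Return the responses whose overall toxicity score is below the threshold."""
    if not responses:
        return []
    # The API limits how much text one request can carry; batching is
    # left out of this sketch.
    result = client.detect_toxic_content(
        TextSegments=[{"Text": text} for text in responses],
        LanguageCode=language_code,
    )
    kept = []
    # Assumes ResultList entries come back in the same order as the input segments.
    for text, item in zip(responses, result["ResultList"]):
        if item["Toxicity"] < threshold:
            kept.append(text)
    return kept


# Usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   comprehend = boto3.client("comprehend")
#   safe = filter_toxic(comprehend, ["candidate response 1", "candidate response 2"])
```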

- Monitoring and Metrics:

- A good framework to monitor the product is essential, and measuring the revenue impact of the chatbot is important.

- Bedrock has parsing patterns that allow for this kind of monitoring.

Conclusion

I am just getting started with the implementation of GenAI solutions; this session was really interesting and the insights provided are really valuable to a newbie like me. I am really happy that I was able to attend this session.

If you enjoyed this post, you may want to read about my thoughts on the re:Invent 2024 keynotes.
